10 Times More Memory Than Previously Calculated

Researchers from the Salk Institute and the University of Texas at Austin have discovered that the brain's memory capacity is 10 times higher than previously estimated. By examining the size of neural connections, the neuroscientists also gained insight into why brains are so energy-efficient. The study, published in eLife, could help in designing more powerful and energy-efficient computers.

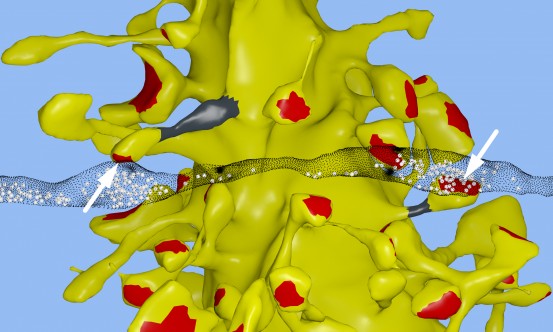

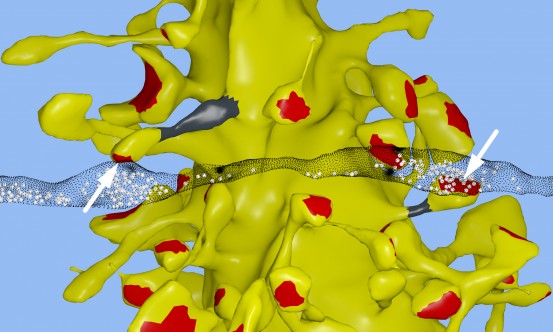

Neurons transmit information in the form of electrical and chemical signals. Typically, neurotransmitters are released from the axon of one neuron onto a dendrite of another. These junctions are called synapses, and a single neuron can form thousands of them. Synapse malfunction can cause neurological diseases. While reconstructing tissue from a rat hippocampus, the Salk team found a single axon forming two synapses with the same dendrite. Although this arrangement is relatively common, the researchers did not expect to be able to tell two adjacent synapses apart in such detail. Based on this finding, the teams of Terry Sejnowski and Kristen Harris decided to make a 3D reconstruction of brain synapses to better understand their complexity and diversity.

26 sizes of synapses

Using electron microscopy, the teams reconstructed the connectivity and shape of the different types of synapses that occur in the brain of a rat. The paired synapses were almost identical in size, differing by only about 8%. Combined with the already known 60-fold range of synapse sizes, this precision means that synapses can take about 26 distinguishable sizes, far more than expected. In computer terms, these sizes correspond to about 4.7 bits of information per synapse, which implies a memory capacity an order of magnitude greater than previously calculated. The researchers also discovered how the brain can be so precise even though synapses succeed in delivering a signal only 10-20% of the time. Synapses constantly adjust their size, and with it their ability to transmit a signal, based on their previous successes and failures.
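The step from 26 sizes to 4.7 bits follows a standard information-theory rule: something that can reliably occupy one of N states stores log2 N bits. The sketch below assumes every size is equally likely and perfectly distinguishable, which gives an upper bound; the function name `bits_per_synapse` is hypothetical and not taken from the study.

```python
import math


def bits_per_synapse(num_sizes: int) -> float:
    """Upper-bound information capacity (in bits) of a synapse that can take
    one of `num_sizes` distinguishable, equally likely sizes: log2(num_sizes).
    Hypothetical helper for illustration only."""
    if num_sizes < 1:
        raise ValueError("num_sizes must be at least 1")
    return math.log2(num_sizes)


# 26 distinguishable sizes, as reported in the article
print(f"{bits_per_synapse(26):.1f} bits")  # prints "4.7 bits"
```

If some sizes occur more often than others, the Shannon entropy of the actual size distribution would be lower than this equal-probability figure.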

These findings could have implications for the study of memory and learning. In addition, the brain's low energy consumption, its large storage capacity (on the order of a petabyte) and the probabilistic release of neurotransmitters open the door to rethinking computer design to make computers more efficient and precise.

Source: Salk